Spring AI - Ollama with AI Agent

Overview

Spring AI - Ollama (Chat Model)

GitHub: https://github.com/gitorko/project09

Spring AI

Ollama is a platform designed to allow developers to run large language models (LLMs) locally.

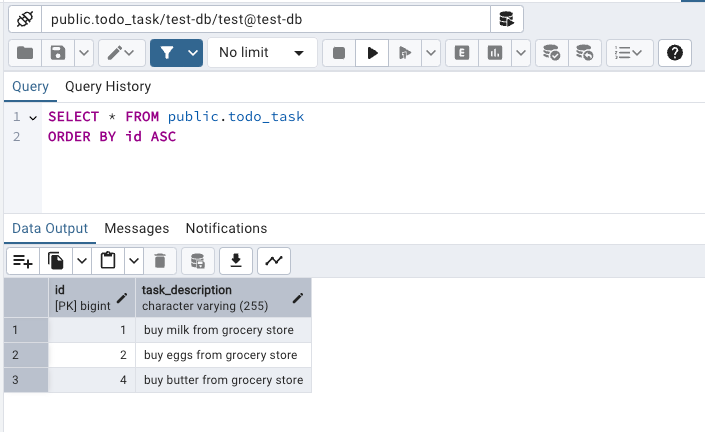

In this example we run the llama3.1 model locally with Ollama and write an AI agent that talks to a Postgres database to add, remove, fetch, and search tasks in a TODO application.

Code

package com.demo.project09.controller;

import com.demo.project09.agent.ChatAgent;
import com.demo.project09.agent.TodoAgent;
import org.springframework.beans.factory.annotation.Autowired;
import org.springframework.web.bind.annotation.*;

import java.time.Instant;

@RestController
@RequestMapping("/")
public class HomeController {

    @Autowired
    TodoAgent todoAgent;

    @Autowired
    ChatAgent chatAgent;

    // Returns the current server time as an ISO-8601 instant.
    @GetMapping("/time")
    public String getDate() {
        return Instant.now().toString();
    }

    // Plain chat with the model.
    @PostMapping("/talk")
    public String talk(@RequestBody String message) {
        return chatAgent.talk(message);
    }

    // Chat with the todo agent.
    @PostMapping("/agent")
    public String agent(@RequestBody String message) {
        // Fixed conversation id, so all requests share one chat history.
        String chatId = "10";
        return todoAgent.talk(chatId, message);
    }
}

package com.demo.project09.config;

import org.springframework.ai.chat.client.ChatClient;
import org.springframework.ai.chat.client.advisor.MessageChatMemoryAdvisor;
import org.springframework.ai.chat.client.advisor.QuestionAnswerAdvisor;
import org.springframework.ai.chat.memory.ChatMemory;
import org.springframework.ai.chat.memory.InMemoryChatMemory;
import org.springframework.ai.chat.model.ChatModel;
import org.springframework.ai.embedding.EmbeddingModel;
import org.springframework.ai.vectorstore.SimpleVectorStore;
import org.springframework.ai.vectorstore.VectorStore;
import org.springframework.context.annotation.Bean;
import org.springframework.context.annotation.Configuration;

@Configuration
public class ChatConfig {

    // Client used by ChatAgent for plain chat.
    @Bean
    ChatClient chatClient(ChatModel chatModel) {
        return ChatClient.create(chatModel);
    }

    // Client used by TodoAgent: system prompt, advisors, and the todo functions.
    @Bean
    ChatClient agentClient(ChatModel chatModel, VectorStore vectorStore, ChatMemory chatMemory) {
        ChatClient.Builder builder = ChatClient.builder(chatModel);
        return builder.defaultSystem("""
                        You are a todo task application bot named "alexa" for the application "FunApp"
                        Respond in a friendly, helpful, and joyful manner.
                        You are interacting with customers through an online chat system where they can add, remove and get todo tasks
                        Before adding a todo task you MUST get the task description string from the user.
                        Before getting the specific todo task you need to ask the user to provide the task number.
                        Before getting tasks that the user wants you need to get the single keyword in the task from the user.
                        To delete a todo task take the task number from the user
                        To search a todo task take the keyword string from the user
                        Use the provided functions to fetch todo tasks, add todo task, remove todo tasks and search todo tasks.
                        Today is {current_date}.
                        """)
                .defaultAdvisors(
                        new MessageChatMemoryAdvisor(chatMemory), // chat-memory advisor
                        new QuestionAnswerAdvisor(vectorStore) // RAG advisor
                )
                // Names of the function beans declared in TodoAgent.
                .defaultFunctions("getTodo", "addTodo", "deleteTodo", "searchTodo")
                .build();
    }

    @Bean
    public VectorStore vectorStore(EmbeddingModel embeddingModel) {
        return SimpleVectorStore.builder(embeddingModel)
                .build();
    }

    @Bean
    public ChatMemory chatMemory() {
        return new InMemoryChatMemory();
    }

}
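The chat-memory advisor configured above keeps earlier messages so the agent can follow a multi-turn conversation. As a rough illustration of the idea only, here is a minimal plain-Java sketch of a per-conversation message store. The class `ConversationMemorySketch` and its methods are hypothetical names invented for this example; this is not how Spring AI's `InMemoryChatMemory` is implemented.

```java
import java.util.ArrayList;
import java.util.HashMap;
import java.util.List;
import java.util.Map;

// Illustrative only: a hypothetical per-conversation message store,
// not Spring AI's InMemoryChatMemory. Not safe for concurrent use.
final class ConversationMemorySketch {

    // conversation id -> messages in arrival order
    private final Map<String, List<String>> store = new HashMap<>();

    // Append a message to the given conversation.
    void add(String conversationId, String message) {
        store.computeIfAbsent(conversationId, id -> new ArrayList<>()).add(message);
    }

    // Return at most lastN of the most recent messages, oldest first.
    List<String> recent(String conversationId, int lastN) {
        List<String> all = store.getOrDefault(conversationId, List.of());
        int from = Math.max(0, all.size() - lastN);
        return List.copyOf(all.subList(from, all.size()));
    }

    public static void main(String[] args) {
        ConversationMemorySketch memory = new ConversationMemorySketch();
        memory.add("10", "user: add a task");
        memory.add("10", "assistant: what is the description?");
        memory.add("10", "user: buy milk");
        // Only the two most recent messages of conversation "10" are returned.
        System.out.println(memory.recent("10", 2));
    }
}
```

Keying the store by conversation id is what lets separate chats keep separate histories; the retrieval limit bounds how much history is replayed to the model on each request.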

package com.demo.project09.agent;

import lombok.RequiredArgsConstructor;
import org.springframework.ai.chat.client.ChatClient;
import org.springframework.stereotype.Service;

@Service
@RequiredArgsConstructor
public class ChatAgent {

    private final ChatClient chatClient;

    public String talk(String message) {
        return chatClient.prompt().user(message).call().content();
    }
}

package com.demo.project09.agent;

import com.demo.project09.domain.TodoTask;
import com.demo.project09.service.TodoService;
import com.fasterxml.jackson.annotation.JsonInclude;
import lombok.RequiredArgsConstructor;
import lombok.extern.slf4j.Slf4j;
import org.springframework.ai.chat.client.ChatClient;
import org.springframework.context.annotation.Bean;
import org.springframework.context.annotation.Description;
import org.springframework.stereotype.Service;

import java.time.LocalDate;
import java.util.function.Consumer;
import java.util.function.Function;

@Service
@Slf4j
@RequiredArgsConstructor
public class TodoAgent {

    // Keys for the advisor parameters passed on each request.
    public static final String CHAT_MEMORY_CONVERSATION_ID = "chat_memory_conversation_id";
    public static final String CHAT_MEMORY_RESPONSE_SIZE = "chat_memory_response_size";
    private final ChatClient agentClient;
    private final TodoService todoService;

    public String talk(String chatId, String message) {
        return agentClient.prompt()
                // Fills the {current_date} placeholder in the system prompt.
                .system(s -> s.param("current_date", LocalDate.now().toString()))
                .advisors(advisor -> advisor.param(CHAT_MEMORY_CONVERSATION_ID, chatId)
                        .param(CHAT_MEMORY_RESPONSE_SIZE, 100))
                .user(message)
                .call()
                .content();
    }

    // The beans below are the functions listed in defaultFunctions(...) in ChatConfig.

    @Bean
    @Description("Get Todo")
    public Function<Void, Iterable<TodoTask>> getTodo() {
        return request -> {
            return todoService.getTodo();
        };
    }

    @Bean
    @Description("Add Todo")
    public Function<TodoTask, TodoTask> addTodo() {
        return request -> {
            return todoService.addTodo(request.getTaskDescription());
        };
    }

    @Bean
    @Description("Delete Todo")
    public Consumer<TodoTask> deleteTodo() {
        return request -> {
            todoService.deleteTodo(request.getId());
        };
    }

    @Bean
    @Description("Search Todo")
    public Function<SearchKey, TodoTask> searchTodo() {
        return request -> {
            return todoService.searchTodo(request.keyword());
        };
    }

    @JsonInclude(JsonInclude.Include.NON_NULL)
    public record SearchKey(String keyword) {
    }
}
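The agent works because the model can ask for one of the named functions (`getTodo`, `addTodo`, `deleteTodo`, `searchTodo`) and the framework invokes the matching bean. The following plain-Java sketch illustrates only the general name-to-function dispatch idea. `FunctionDispatchSketch`, `register`, and `invoke` are hypothetical names made up for this example, and the string-in, string-out signature is a simplification; Spring AI's actual function-calling machinery is more involved.

```java
import java.util.LinkedHashMap;
import java.util.Map;
import java.util.function.Function;

// Illustrative only: a hypothetical name-to-function registry,
// not Spring AI's function-calling implementation.
final class FunctionDispatchSketch {

    // function name -> handler taking a single string argument
    private final Map<String, Function<String, String>> functions = new LinkedHashMap<>();

    // Make a function available under the given name.
    void register(String name, Function<String, String> fn) {
        functions.put(name, fn);
    }

    // Look up the function by name and apply it to the argument.
    String invoke(String name, String argument) {
        Function<String, String> fn = functions.get(name);
        if (fn == null) {
            throw new IllegalArgumentException("Unknown function: " + name);
        }
        return fn.apply(argument);
    }

    public static void main(String[] args) {
        FunctionDispatchSketch registry = new FunctionDispatchSketch();
        registry.register("addTodo", description -> "added: " + description);
        registry.register("searchTodo", keyword -> "searching for: " + keyword);
        // A function-call request from a model carries a name and arguments like these.
        System.out.println(registry.invoke("addTodo", "buy milk"));
    }
}
```

Registering functions by name keeps the prompt-facing contract (the names and descriptions the model sees) separate from the code that touches the database.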

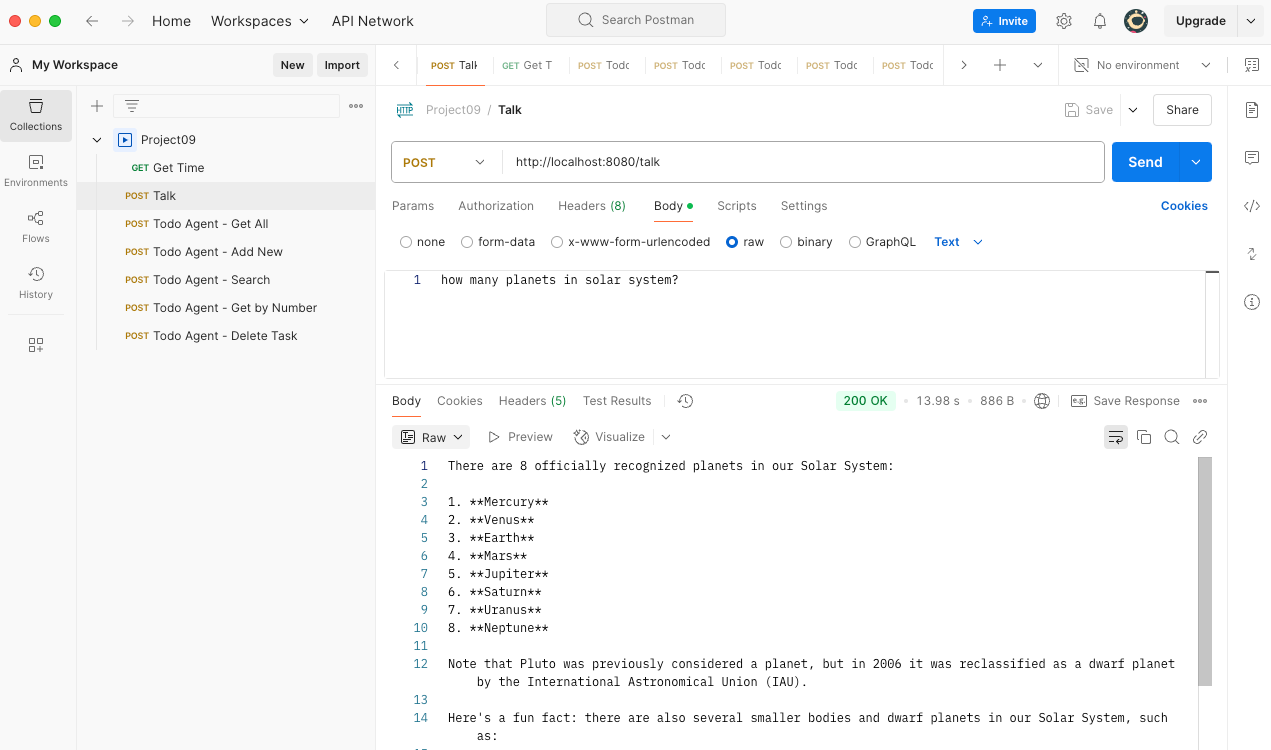

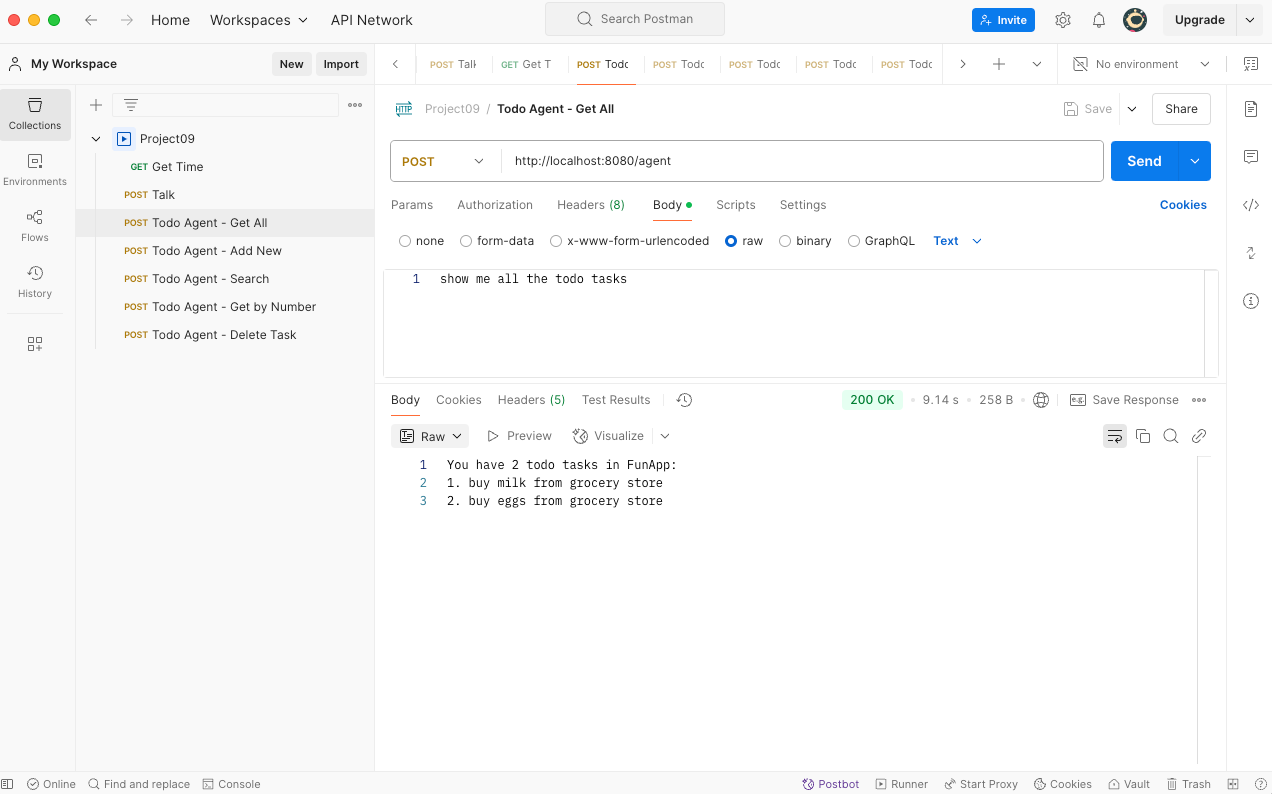

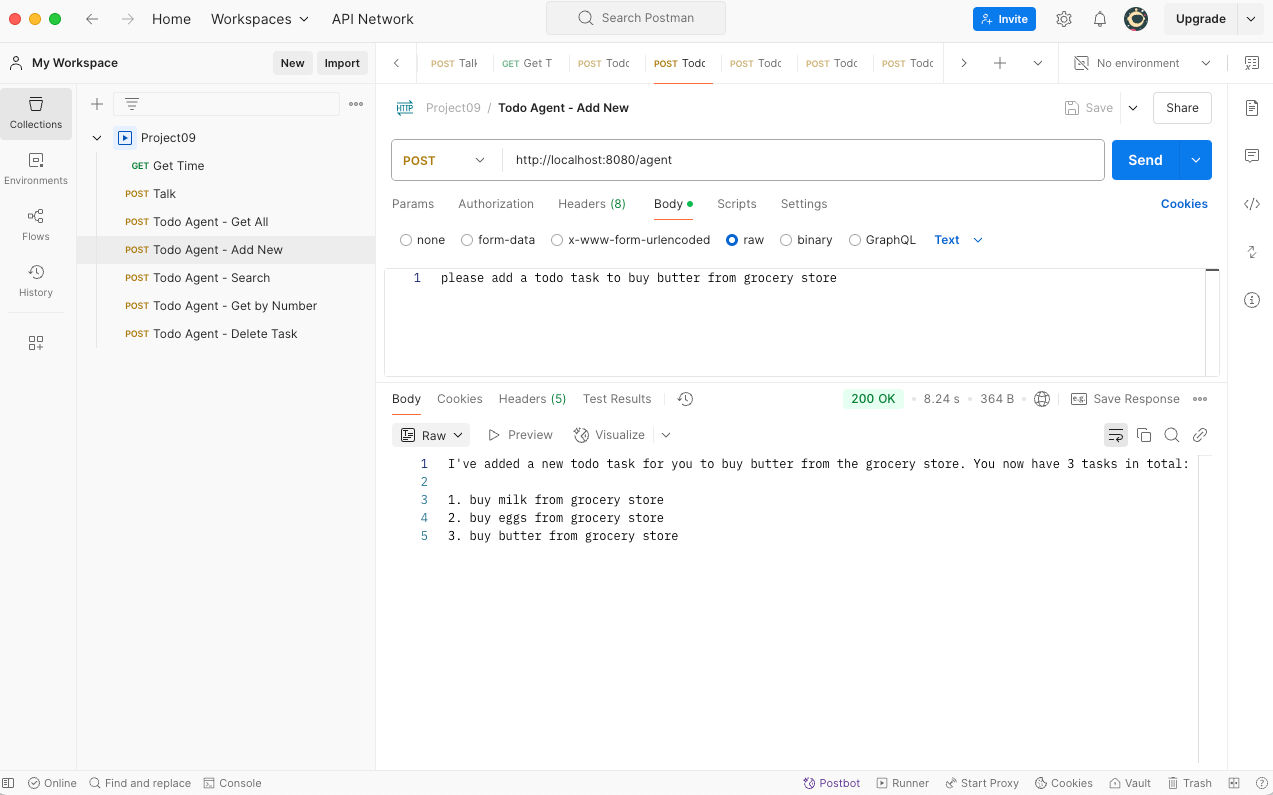

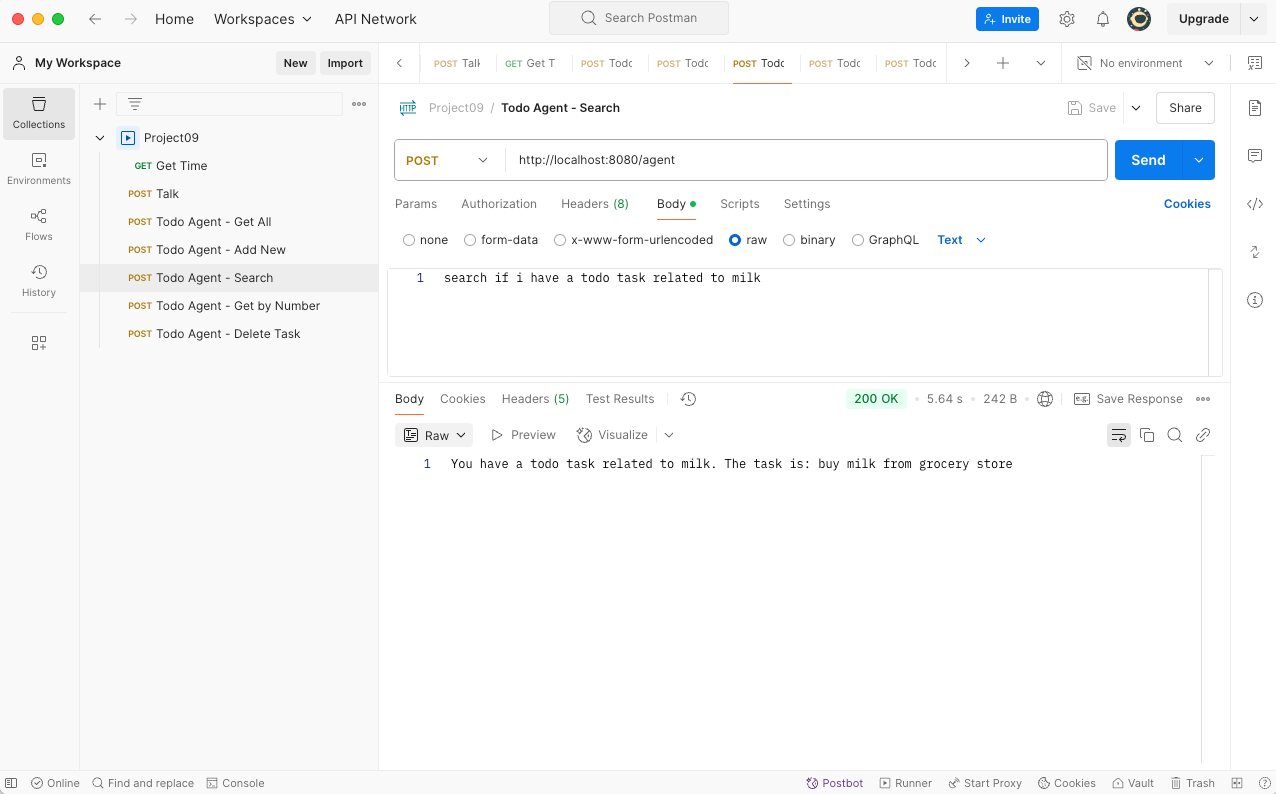

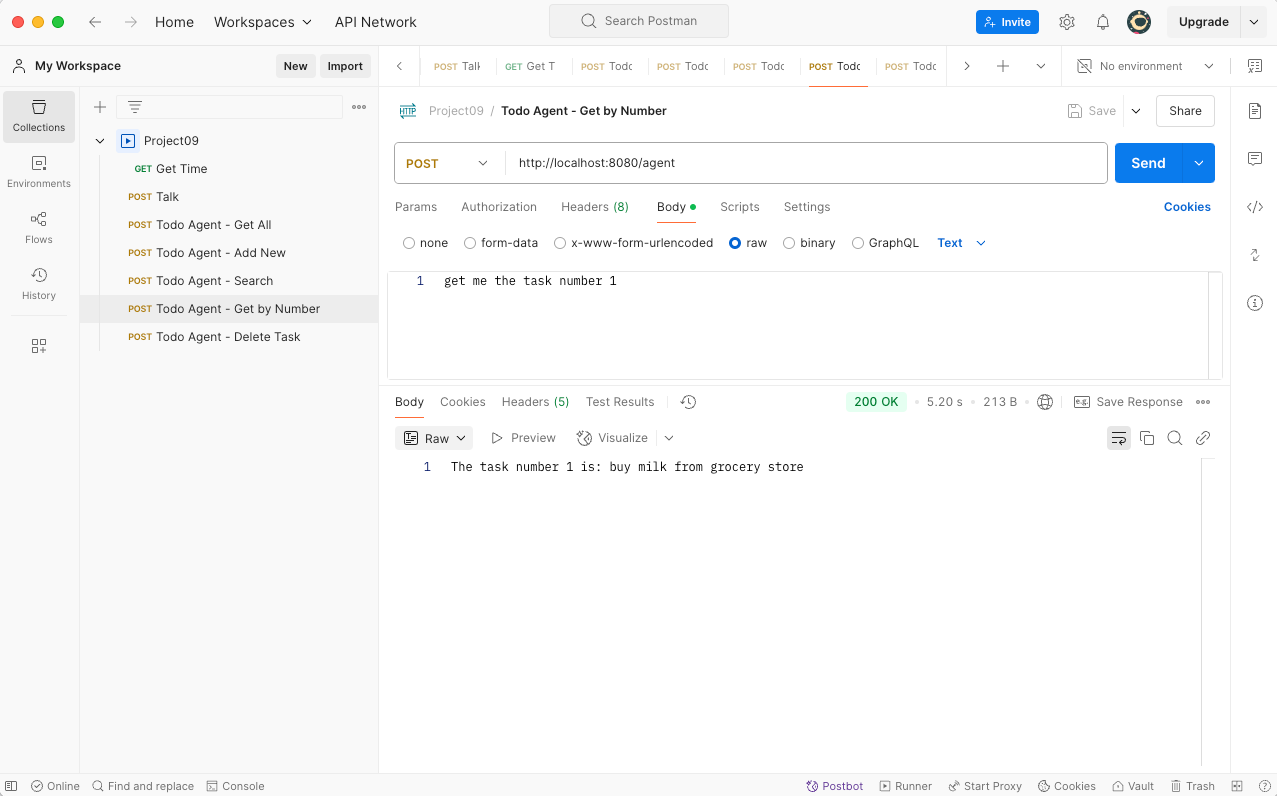

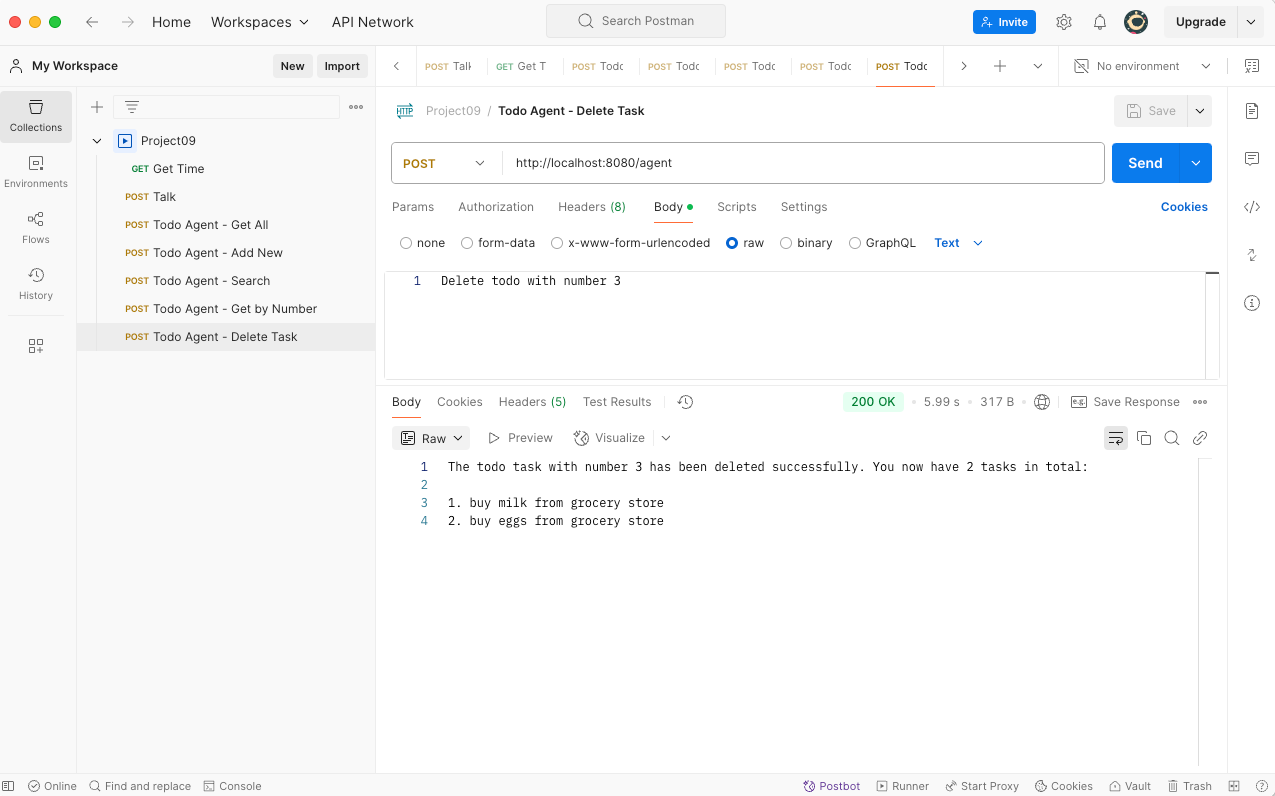

Postman

Import the Postman collection into Postman to call the endpoints.
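If you would rather call the endpoints from code, the sketch below builds the same kind of request with the JDK's `java.net.http` client. It assumes the application is listening on `http://localhost:8080` (Spring Boot's default port, not stated in this post) and posts a plain-text body to `/agent`. `AgentRequestSketch` and `buildRequest` are hypothetical names used only for this example.

```java
import java.io.IOException;
import java.net.URI;
import java.net.http.HttpClient;
import java.net.http.HttpRequest;
import java.net.http.HttpResponse;
import java.time.Duration;

// Illustrative only: calls the /agent endpoint with the JDK HTTP client.
final class AgentRequestSketch {

    // Build a POST request that sends the message as the raw request body.
    static HttpRequest buildRequest(String baseUrl, String path, String message) {
        return HttpRequest.newBuilder()
                .uri(URI.create(baseUrl + path))
                .header("Content-Type", "text/plain")
                .timeout(Duration.ofSeconds(60))
                .POST(HttpRequest.BodyPublishers.ofString(message))
                .build();
    }

    public static void main(String[] args) throws InterruptedException {
        // Assumption: the app runs locally on port 8080.
        HttpRequest request = buildRequest("http://localhost:8080", "/agent", "Add a task to buy milk");
        HttpClient client = HttpClient.newBuilder()
                .connectTimeout(Duration.ofSeconds(5))
                .build();
        try {
            HttpResponse<String> response = client.send(request, HttpResponse.BodyHandlers.ofString());
            System.out.println(response.body());
        } catch (IOException e) {
            // Reached when the application is not running or not reachable.
            System.out.println("Could not reach the application: " + e);
        }
    }
}
```

Swapping the path for `/talk` sends the message to the plain chat endpoint instead of the todo agent.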

Setup

# project09

Spring AI with Ollama

### Version

Check the Java version:

```bash
$ java --version
openjdk 21
```

### Ollama

Download and install Ollama from
[https://ollama.com/](https://ollama.com/)

Run the chat model and pull the embedding model:

```bash
ollama run llama3.1
ollama pull mxbai-embed-large
```

### Postgres DB

```bash
docker run -p 5432:5432 --name pg-container -e POSTGRES_PASSWORD=password -d postgres:14
docker ps
docker exec -it pg-container psql -U postgres -W postgres
CREATE USER test WITH PASSWORD 'test@123';
CREATE DATABASE "test-db" WITH OWNER "test" ENCODING UTF8 TEMPLATE template0;
GRANT ALL PRIVILEGES ON DATABASE "test-db" TO test;

docker stop pg-container
docker start pg-container
```

### Dev

To run the backend in dev mode:

```bash
./gradlew clean build
./gradlew bootRun
```

References

https://spring.io/projects/spring-ai/

https://docs.spring.io/spring-ai/reference/api/chat/ollama-chat.html
