Spring - Apache Ignite

Overview

Spring Boot application with Apache Ignite integration.

Github: https://github.com/gitorko/project91

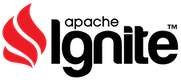

Apache Ignite

Apache Ignite is a distributed database. Data in Ignite is stored in-memory and/or on-disk, and is either partitioned or replicated across a cluster of multiple nodes.

Features

- Key-value Store

- In-Memory Cache

- In-Memory Data Grid - Data stored on multiple nodes

- In-Memory Database - Disk-based persistence

- ACID transactions - Only at the key-value level

- SQL queries - Does not support foreign key constraints

- Stream processing

- Distributed compute - Computations run on the nodes that hold the data (co-location)

- Messaging queue

- Multi-tier storage - Memory backed by disk or by an external database such as PostgreSQL or MySQL

- Distributed SQL - SQL queries that fetch data stored on different nodes

Apache Ignite Setup

- Embedded server

- Embedded client

- Cluster setup

When write-behind is enabled, Apache Ignite synchronizes changes with the database asynchronously, in a background task. When read-through is enabled, an entity that is not in the cache is read from the database and put into the cache for future use.
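The read-through and write-behind behaviour described above can be modelled in plain Java. This is a conceptual sketch, not Ignite's implementation. The `WriteBehindCache` class, its `tick` method and the explicit clock values are hypothetical, and a `HashMap` stands in for the database. The two flush rules mirror the `setWriteBehindFlushSize` and `setWriteBehindFlushFrequency` settings used for `employee-cache` in the configuration code.

```java
import java.util.HashMap;
import java.util.LinkedHashMap;
import java.util.Map;

/**
 * Conceptual model of a read-through, write-behind cache (hypothetical, not Ignite code).
 * A Map stands in for the database; time is passed in explicitly so the flush rules are easy to follow.
 */
class WriteBehindCache<K, V> {
    private final Map<K, V> cache = new HashMap<>();
    private final Map<K, V> pending = new LinkedHashMap<>(); // updates not yet written to the DB
    private final Map<K, V> database;
    private final int flushSize;         // flush when MORE than this many updates are buffered
    private final long flushFrequencyMs; // ...or when this much time has passed since the last flush
    private long lastFlushMs;

    WriteBehindCache(Map<K, V> database, int flushSize, long flushFrequencyMs, long nowMs) {
        this.database = database;
        this.flushSize = flushSize;
        this.flushFrequencyMs = flushFrequencyMs;
        this.lastFlushMs = nowMs;
    }

    /** Read-through: on a miss, load the value from the DB and keep it in the cache. */
    V get(K key) {
        return cache.computeIfAbsent(key, database::get);
    }

    /** Write-behind: update the cache immediately and queue the DB write. */
    void put(K key, V value, long nowMs) {
        cache.put(key, value);
        pending.put(key, value); // repeated updates to one key collapse into one DB write
        if (pending.size() > flushSize) {
            flush(nowMs);
        }
    }

    /** In a real system a background task calls this periodically. */
    void tick(long nowMs) {
        if (!pending.isEmpty() && nowMs - lastFlushMs >= flushFrequencyMs) {
            flush(nowMs);
        }
    }

    int pendingCount() {
        return pending.size();
    }

    private void flush(long nowMs) {
        database.putAll(pending); // one batched write instead of one write per put
        pending.clear();
        lastFlushMs = nowMs;
    }
}
```

The trade-off shows up directly in the model. Until a flush happens, the database lags behind the cache, and buffered updates would be lost if the process died.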

Terminology

- Cache Mode

  - Partitioned Cache (default) - Data is split into partitions and each partition is stored on a different node (fast writes)

  - Replicated Cache - Every node holds a full copy of the data (slow writes)

- Affinity Function - Determines which partition a key belongs to and which nodes own that partition

- Backup - Each partition has a primary owner node and, optionally, backup copies on other nodes

- Atomicity Mode

  - Atomic (default) - Operations are performed atomically, one at a time. Transactions are not supported.

  - Transactional - ACID-compliant transactions (slower)

- Eviction - LRU

  - When persistence is off, evicted data is lost

  - When persistence is on, the page is evicted from memory but the data is still present on disk
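The partitioned-mode terms above can be made concrete with a small model. It maps a key to a partition and then ranks nodes per partition with rendezvous (highest-random-weight) hashing, which yields a primary owner and backups. This is a simplified sketch, not Ignite's `RendezvousAffinityFunction`. That class is Ignite's default affinity function and uses 1024 partitions by default, but its hashing details differ. The class and method names here are hypothetical.

```java
import java.util.ArrayList;
import java.util.Comparator;
import java.util.List;

/**
 * Simplified model of partitioned-cache placement: key -> partition -> owner nodes.
 * Hypothetical class; uses rendezvous hashing but not Ignite's exact hash functions.
 */
class AffinitySketch {
    /** Same default partition count as Ignite's RendezvousAffinityFunction. */
    static final int PARTITIONS = 1024;

    /** Step 1: a key always maps to the same partition. */
    static int partition(Object key) {
        return Math.floorMod(key.hashCode(), PARTITIONS);
    }

    /** Pseudo-random weight of a (node, partition) pair, mixed with MurmurHash3's fmix64. */
    static long weight(String nodeId, int partition) {
        long h = nodeId.hashCode() * 0x9E3779B97F4A7C15L + partition;
        h ^= h >>> 33;
        h *= 0xff51afd7ed558ccdL;
        h ^= h >>> 33;
        h *= 0xc4ceb93fe53e4ce5L;
        h ^= h >>> 33;
        return h;
    }

    /** Step 2: highest weight is the primary, the next `backups` nodes hold backup copies. */
    static List<String> owners(int partition, List<String> nodes, int backups) {
        List<String> ranked = new ArrayList<>(nodes);
        ranked.sort(Comparator.comparingLong((String node) -> weight(node, partition)).reversed());
        return new ArrayList<>(ranked.subList(0, Math.min(backups + 1, ranked.size())));
    }

    public static void main(String[] args) {
        List<String> nodes = List.of("node0", "node1", "node2");
        int p = partition(42L);
        System.out.println("key 42 -> partition " + p + ", owners " + owners(p, nodes, 1));
    }
}
```

Rendezvous hashing is attractive here because removing a node only moves the partitions that node owned. Setting `backups` to `nodes.size() - 1` puts every partition on every node, which is effectively what a replicated cache gives you.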

Redis vs Apache Ignite

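Redis keeps the whole data set in memory; its persistence (RDB snapshots, AOF) provides durability but does not let the data set grow beyond RAM. Ignite uses memory and disk together: with native persistence the full data set lives on disk and only part of it has to fit in memory. Hence, Ignite can store much larger amounts of data than Redis.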

EhCache vs Apache Ignite

Ehcache is focused on local (in-process) caching and is primarily intended for single-node scenarios. Clustering it requires an external Terracotta server, and it offers no distributed compute. Apache Ignite differs in the following areas:

- Scalability and Distributed Computing - Ignite scales out across the nodes of a cluster. Data and computation are distributed across the nodes, which provides high availability and fault tolerance.

- Data Partitioning and Replication - Ignite automatically partitions data across the nodes of a cluster, so each node holds only a portion of the overall data set. This enables parallel processing and efficient data retrieval. Replication is configurable, which provides data redundancy and fault tolerance.

- Computational Capabilities - Ignite runs distributed computations across the cluster for parallel processing and improved performance. It provides APIs for distributed SQL queries, machine learning, and real-time streaming analytics.

- Integration with Other Technologies - Ignite provides connectors and integrations for data processing frameworks such as Apache Spark, Apache Hadoop, and Apache Cassandra. It also supports persistence stores such as JDBC databases, NoSQL databases, and the Hadoop Distributed File System (HDFS).

- Transaction Support - Ignite supports distributed transactions, in which multiple nodes of a cluster participate in a single transaction, keeping the distributed cache consistent and isolated.

- Management and Monitoring Capabilities - Ignite offers a web-based management console for monitoring cluster status, metrics, and performance.

Code

1package com.demo.project91.config;

2

3import java.sql.Types;

4import java.util.ArrayList;

5import java.util.Collections;

6import java.util.LinkedHashMap;

7import java.util.List;

8import javax.cache.configuration.Factory;

9import javax.sql.DataSource;

10

11import com.demo.project91.pojo.Customer;

12import com.demo.project91.pojo.Employee;

13import org.apache.ignite.Ignite;

14import org.apache.ignite.Ignition;

15import org.apache.ignite.cache.CacheAtomicityMode;

16import org.apache.ignite.cache.CacheMode;

17import org.apache.ignite.cache.QueryEntity;

18import org.apache.ignite.cache.store.jdbc.CacheJdbcPojoStoreFactory;

19import org.apache.ignite.cache.store.jdbc.JdbcType;

20import org.apache.ignite.cache.store.jdbc.JdbcTypeField;

21import org.apache.ignite.cache.store.jdbc.dialect.BasicJdbcDialect;

22import org.apache.ignite.cluster.ClusterState;

23import org.apache.ignite.configuration.CacheConfiguration;

24import org.apache.ignite.configuration.DataPageEvictionMode;

25import org.apache.ignite.configuration.DataRegionConfiguration;

26import org.apache.ignite.configuration.DataStorageConfiguration;

27import org.apache.ignite.configuration.IgniteConfiguration;

28import org.apache.ignite.kubernetes.configuration.KubernetesConnectionConfiguration;

29import org.apache.ignite.spi.communication.tcp.TcpCommunicationSpi;

30import org.apache.ignite.spi.discovery.tcp.TcpDiscoverySpi;

31import org.apache.ignite.spi.discovery.tcp.ipfinder.kubernetes.TcpDiscoveryKubernetesIpFinder;

32import org.apache.ignite.spi.discovery.tcp.ipfinder.multicast.TcpDiscoveryMulticastIpFinder;

33import org.apache.ignite.springdata.repository.config.EnableIgniteRepositories;

34import org.springframework.beans.factory.annotation.Value;

35import org.springframework.context.annotation.Bean;

36import org.springframework.context.annotation.Configuration;

37import org.springframework.jdbc.datasource.DriverManagerDataSource;

38

39@Configuration

40@EnableIgniteRepositories("com.demo.project91.repository")

41public class IgniteConfig {

42

43 /**

44 * Override the node name for each instance at start using properties

45 */

46 @Value("${ignite.nodeName:node0}")

47 private String nodeName;

48

49 @Value("${ignite.kubernetes.enabled:false}")

50 private Boolean k8sEnabled;

51

52 private String k8sApiServer = "https://kubernetes.docker.internal:6443";

53 private String k8sServiceName = "project04";

54 private String k8sNameSpace = "default";

55

56 @Bean(name = "igniteInstance")

57 public Ignite igniteInstance() {

58 Ignite ignite = Ignition.start(igniteConfiguration());

59

60 /**

61 * If data is persisted then have to explicitly set the cluster state to active.

62 */

63 ignite.cluster().state(ClusterState.ACTIVE);

64 return ignite;

65 }

66

67 @Bean(name = "igniteConfiguration")

68 public IgniteConfiguration igniteConfiguration() {

69 IgniteConfiguration cfg = new IgniteConfiguration();

70 /**

71 * Uniquely identify node in a cluster use consistent Id.

72 */

73 cfg.setConsistentId(nodeName);

74

75 cfg.setIgniteInstanceName("my-ignite-instance");

76 cfg.setPeerClassLoadingEnabled(true);

77 cfg.setLocalHost("127.0.0.1");

78 cfg.setMetricsLogFrequency(0);

79

80 cfg.setCommunicationSpi(tcpCommunicationSpi());

81 if (k8sEnabled) {

82 cfg.setDiscoverySpi(tcpDiscoverySpiKubernetes());

83 } else {

84 cfg.setDiscoverySpi(tcpDiscovery());

85 }

86 cfg.setDataStorageConfiguration(dataStorageConfiguration());

87 cfg.setCacheConfiguration(cacheConfiguration());

88 return cfg;

89 }

90

91 @Bean(name = "cacheConfiguration")

92 public CacheConfiguration[] cacheConfiguration() {

93 List<CacheConfiguration> cacheConfigurations = new ArrayList<>();

94 cacheConfigurations.add(getAccountCacheConfig());

95 cacheConfigurations.add(getCustomerCacheConfig());

96 cacheConfigurations.add(getCountryCacheConfig());

97 cacheConfigurations.add(getEmployeeCacheConfig());

98 return cacheConfigurations.toArray(new CacheConfiguration[cacheConfigurations.size()]);

99 }

100

101 private CacheConfiguration getAccountCacheConfig() {

102 /**

103 * Ignite table to store Account data

104 */

105 CacheConfiguration cacheConfig = new CacheConfiguration();

106 cacheConfig.setAtomicityMode(CacheAtomicityMode.ATOMIC);

107 cacheConfig.setCacheMode(CacheMode.REPLICATED);

108 cacheConfig.setName("account-cache");

109 cacheConfig.setStatisticsEnabled(true);

110 QueryEntity qe = new QueryEntity();

111 qe.setTableName("ACCOUNTS");

112 qe.setKeyFieldName("ID");

113 qe.setKeyType("java.lang.Long");

114 qe.setValueType("java.lang.Object");

115 LinkedHashMap map = new LinkedHashMap();

116 map.put("ID", "java.lang.Long");

117 map.put("amount", "java.lang.Double");

118 map.put("updateDate", "java.util.Date");

119 qe.setFields(map);

120 cacheConfig.setQueryEntities(List.of(qe));

121 return cacheConfig;

122 }

123

124 private CacheConfiguration<Long, Customer> getCustomerCacheConfig() {

125 /**

126 * Customer cache to store Customer.class objects

127 */

128 CacheConfiguration<Long, Customer> cacheConfig = new CacheConfiguration("customer-cache");

129 cacheConfig.setIndexedTypes(Long.class, Customer.class);

130 return cacheConfig;

131 }

132

133 private CacheConfiguration getCountryCacheConfig() {

134 /**

135 * Country cache to store key value pair

136 */

137 CacheConfiguration cacheConfig = new CacheConfiguration("country-cache");

138 /**

139 * This cache will be stored in non-persistent data region

140 */

141 cacheConfig.setDataRegionName("my-data-region");

142 return cacheConfig;

143 }

144

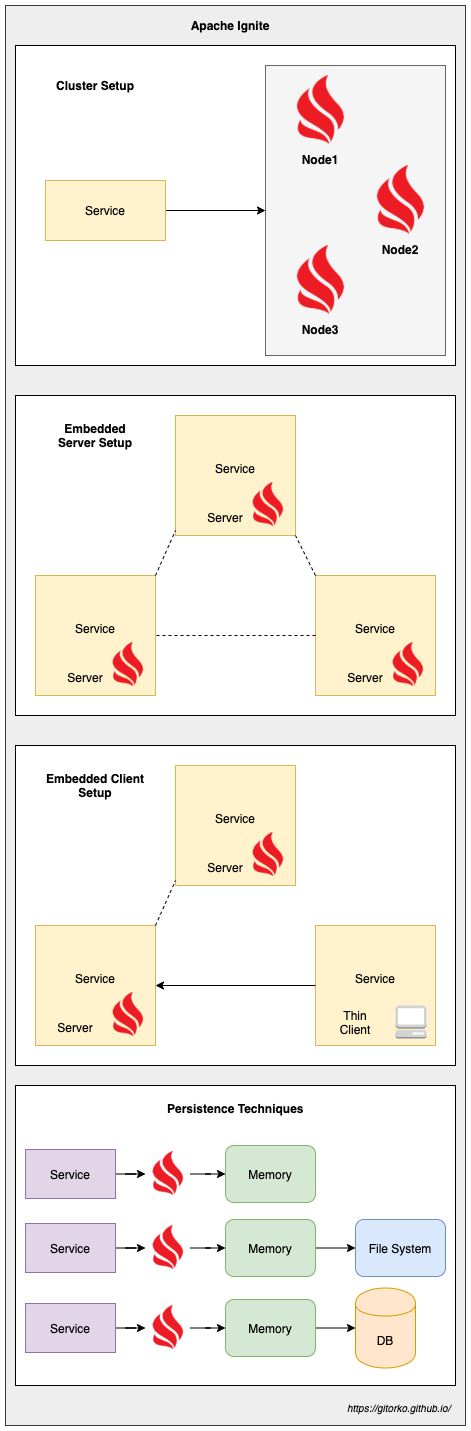

145 private CacheConfiguration<Long, Employee> getEmployeeCacheConfig() {

146 /**

147 * Employee cache to store Employee.class objects

148 */

149 CacheConfiguration<Long, Employee> cacheConfig = new CacheConfiguration("employee-cache");

150 cacheConfig.setIndexedTypes(Long.class, Employee.class);

151 cacheConfig.setCacheStoreFactory(cacheJdbcPojoStoreFactory());

152 /**

153 * If value not present in cache then fetch from db and store in cache

154 */

155 cacheConfig.setReadThrough(true);

156 /**

157 * If value present in cache then write to db.

158 */

159 cacheConfig.setWriteThrough(true);

160 /**

161 * Will wait for sometime to update db asynchronously

162 */

163 cacheConfig.setWriteBehindEnabled(true);

164 /**

165 * Min 2 entires in cache before written to db

166 */

167 cacheConfig.setWriteBehindFlushSize(2);

168 /**

169 * Write to DB at interval delay of 2 seconds

170 */

171 cacheConfig.setWriteBehindFlushFrequency(2000);

172 cacheConfig.setIndexedTypes(Long.class, Employee.class);

173 return cacheConfig;

174 }

175

176 private CacheJdbcPojoStoreFactory cacheJdbcPojoStoreFactory() {

177 CacheJdbcPojoStoreFactory<Long, Employee> factory = new CacheJdbcPojoStoreFactory<>();

178 factory.setDialect(new BasicJdbcDialect());

179

180 //factory.setDataSourceFactory(getDataSourceFactory());

181 factory.setDataSourceFactory(new DbFactory());

182 JdbcType employeeType = getEmployeeJdbcType();

183 factory.setTypes(employeeType);

184 return factory;

185 }

186

187 /**

188 * Nodes discover each other over this port

189 */

190 private TcpDiscoverySpi tcpDiscovery() {

191 TcpDiscoverySpi tcpDiscoverySpi = new TcpDiscoverySpi();

192 TcpDiscoveryMulticastIpFinder ipFinder = new TcpDiscoveryMulticastIpFinder();

193 ipFinder.setAddresses(Collections.singletonList("127.0.0.1:47500..47509"));

194 tcpDiscoverySpi.setIpFinder(ipFinder);

195 tcpDiscoverySpi.setLocalPort(47500);

196 // Changing local port range. This is an optional action.

197 tcpDiscoverySpi.setLocalPortRange(9);

198 //tcpDiscoverySpi.setLocalAddress("localhost");

199 return tcpDiscoverySpi;

200 }

201

202 private TcpDiscoverySpi tcpDiscoverySpiKubernetes() {

203 TcpDiscoverySpi spi = new TcpDiscoverySpi();

204 KubernetesConnectionConfiguration kcfg = new KubernetesConnectionConfiguration();

205 kcfg.setNamespace(k8sNameSpace);

206 kcfg.setMasterUrl(k8sApiServer);

207 TcpDiscoveryKubernetesIpFinder ipFinder = new TcpDiscoveryKubernetesIpFinder(kcfg);

208 ipFinder.setServiceName(k8sServiceName);

209 spi.setIpFinder(ipFinder);

210 return spi;

211 }

212

213 /**

214 * Nodes communicate with each other over this port

215 */

216 private TcpCommunicationSpi tcpCommunicationSpi() {

217 TcpCommunicationSpi communicationSpi = new TcpCommunicationSpi();

218 communicationSpi.setMessageQueueLimit(1024);

219 communicationSpi.setLocalAddress("localhost");

220 communicationSpi.setLocalPort(48100);

221 communicationSpi.setSlowClientQueueLimit(1000);

222 return communicationSpi;

223 }

224

225 private DataStorageConfiguration dataStorageConfiguration() {

226 DataStorageConfiguration dsc = new DataStorageConfiguration();

227 DataRegionConfiguration defaultRegionCfg = new DataRegionConfiguration();

228 DataRegionConfiguration regionCfg = new DataRegionConfiguration();

229

230 defaultRegionCfg.setName("default-data-region");

231 defaultRegionCfg.setInitialSize(10 * 1024 * 1024); //10MB

232 defaultRegionCfg.setMaxSize(50 * 1024 * 1024); //50MB

233

234 /**

235 * The cache will be persisted on default region

236 */

237 defaultRegionCfg.setPersistenceEnabled(true);

238

239 /**

240 * Eviction mode

241 */

242 defaultRegionCfg.setPageEvictionMode(DataPageEvictionMode.RANDOM_LRU);

243

244 regionCfg.setName("my-data-region");

245 regionCfg.setInitialSize(10 * 1024 * 1024); //10MB

246 regionCfg.setMaxSize(50 * 1024 * 1024); //50MB

247 /**

248 * Cache in this region will not be persisted

249 */

250 regionCfg.setPersistenceEnabled(false);

251

252 dsc.setDefaultDataRegionConfiguration(defaultRegionCfg);

253 dsc.setDataRegionConfigurations(regionCfg);

254

255 return dsc;

256 }

257

258 /**

259 * Since it serializes you cant pass variables. Use the DbFactory.class

260 */

261 private Factory<DataSource> getDataSourceFactory() {

262 return () -> {

263 DriverManagerDataSource driverManagerDataSource = new DriverManagerDataSource();

264 driverManagerDataSource.setDriverClassName("org.postgresql.Driver");

265 driverManagerDataSource.setUrl("jdbc:postgresql://localhost:5432/test-db");

266 driverManagerDataSource.setUsername("test");

267 driverManagerDataSource.setPassword("test@123");

268 return driverManagerDataSource;

269 };

270 }

271

272 private JdbcType getEmployeeJdbcType() {

273 JdbcType employeeType = new JdbcType();

274 employeeType.setCacheName("employee-cache");

275 employeeType.setDatabaseTable("employee");

276 employeeType.setKeyType(Long.class);

277 employeeType.setKeyFields(new JdbcTypeField(Types.BIGINT, "id", Long.class, "id"));

278 employeeType.setValueFields(

279 new JdbcTypeField(Types.BIGINT, "id", Long.class, "id"),

280 new JdbcTypeField(Types.VARCHAR, "name", String.class, "name"),

281 new JdbcTypeField(Types.VARCHAR, "email", String.class, "email")

282 );

283 employeeType.setValueType(Employee.class);

284 return employeeType;

285 }

286

287}

1package com.demo.project91.config;

2

3import org.apache.ignite.cache.spring.SpringCacheManager;

4import org.apache.ignite.configuration.IgniteConfiguration;

5import org.springframework.cache.annotation.EnableCaching;

6import org.springframework.context.annotation.Bean;

7import org.springframework.context.annotation.Configuration;

8

9@Configuration

10@EnableCaching

11public class SpringCacheConfig {

12 @Bean

13 public SpringCacheManager cacheManager() {

14 SpringCacheManager cacheManager = new SpringCacheManager();

15 cacheManager.setConfiguration(getSpringCacheIgniteConfiguration());

16 return cacheManager;

17 }

18

19 private IgniteConfiguration getSpringCacheIgniteConfiguration() {

20 return new IgniteConfiguration()

21 .setIgniteInstanceName("spring-ignite-instance")

22 .setMetricsLogFrequency(0);

23 }

24}

1package com.demo.project91.config;

2

3import java.io.Serializable;

4import javax.cache.configuration.Factory;

5import javax.sql.DataSource;

6

7import org.springframework.beans.factory.annotation.Value;

8import org.springframework.context.annotation.Configuration;

9import org.springframework.jdbc.datasource.DriverManagerDataSource;

10

11@Configuration

12public class DbFactory implements Serializable, Factory<DataSource> {

13

14 private static final long serialVersionUID = -1L;

15

16 @Value("${spring.datasource.url}")

17 private String jdbcUrl;

18

19 @Value("${spring.datasource.username}")

20 private String username;

21

22 @Value("${spring.datasource.password}")

23 private String password;

24

25 @Override

26 public DataSource create() {

27 DriverManagerDataSource driverManagerDataSource = new DriverManagerDataSource();

28 driverManagerDataSource.setDriverClassName("org.postgresql.Driver");

29 driverManagerDataSource.setUrl(jdbcUrl);

30 driverManagerDataSource.setUsername(username);

31 driverManagerDataSource.setPassword(password);

32 return driverManagerDataSource;

33 }

34}
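The `DataPageEvictionMode.RANDOM_LRU` setting in `dataStorageConfiguration()` does not maintain a strict LRU list. The Ignite documentation describes it as keeping a last-access timestamp per data page. When a page must be evicted, it samples five random pages and evicts the one with the oldest timestamp. The sketch below models that sampling idea with a `long[]` of timestamps. The class is hypothetical and simplified: it ignores page contents, region thresholds and concurrency.

```java
import java.util.Random;

/**
 * Sampling-based approximation of LRU, modelled on the documented idea behind RANDOM_LRU.
 * Hypothetical class, not Ignite code.
 */
class RandomLruSketch {
    static final int SAMPLES = 5;
    private final long[] lastAccess; // logical timestamp per page; 0 means the slot is free
    private final Random random;
    private long clock = 0;

    RandomLruSketch(int pages, long seed) {
        lastAccess = new long[pages];
        random = new Random(seed);
    }

    /** Record an access: the page becomes the most recently used. */
    void touch(int page) {
        lastAccess[page] = ++clock;
    }

    /** Sample SAMPLES random slots and return the in-use page with the oldest access, or -1. */
    int chooseVictim() {
        int victim = -1;
        for (int i = 0; i < SAMPLES; i++) {
            int candidate = random.nextInt(lastAccess.length);
            if (lastAccess[candidate] == 0) {
                continue; // free slot, nothing to evict
            }
            if (victim < 0 || lastAccess[candidate] < lastAccess[victim]) {
                victim = candidate;
            }
        }
        return victim;
    }

    void evict(int page) {
        lastAccess[page] = 0;
    }
}
```

Sampling keeps eviction cheap, because no linked list has to be reordered on every access. The cost is that it sometimes evicts a page that is old but not the globally oldest.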

```java
package com.demo.project91.service;

import java.util.Optional;

import com.demo.project91.pojo.Customer;
import com.demo.project91.repository.CustomerRepository;
import lombok.RequiredArgsConstructor;
import lombok.extern.slf4j.Slf4j;
import org.springframework.stereotype.Service;

/**
 * Interact with Ignite via IgniteRepository
 */
@Service
@RequiredArgsConstructor
@Slf4j
public class CustomerService {

    final CustomerRepository customerRepository;

    public Customer saveCustomer(Customer customer) {
        return customerRepository.save(customer.getId(), customer);
    }

    public Iterable<Customer> getAllCustomers() {
        return customerRepository.findAll();
    }

    public Optional<Customer> getCustomerById(Long id) {
        return customerRepository.findById(id);
    }

}
```

```java
package com.demo.project91.service;

import java.util.ArrayList;
import java.util.List;
import javax.cache.Cache;

import com.demo.project91.pojo.Employee;
import jakarta.annotation.PostConstruct;
import lombok.RequiredArgsConstructor;
import org.apache.ignite.Ignite;
import org.apache.ignite.IgniteCache;
import org.springframework.stereotype.Service;

/**
 * Interact with Ignite as key-value store (persistent store)
 */
@Service
@RequiredArgsConstructor
public class EmployeeService {

    final Ignite ignite;
    IgniteCache<Long, Employee> cache;

    @PostConstruct
    public void postInit() {
        cache = ignite.cache("employee-cache");
    }

    public Employee save(Employee employee) {
        cache.put(employee.getId(), employee);
        return employee;
    }

    public Iterable<Employee> getAllEmployees() {
        List<Employee> employees = new ArrayList<>();
        for (Cache.Entry<Long, Employee> e : cache) {
            employees.add(e.getValue());
        }
        return employees;
    }
}
```

```java
package com.demo.project91.service;

import java.sql.ResultSet;
import java.sql.SQLException;
import java.util.List;

import com.demo.project91.pojo.Company;
import lombok.RequiredArgsConstructor;
import lombok.extern.slf4j.Slf4j;
import org.springframework.cache.annotation.Cacheable;
import org.springframework.jdbc.core.JdbcTemplate;
import org.springframework.jdbc.core.RowMapper;
import org.springframework.stereotype.Service;

/**
 * Interact with Ignite via Spring @Cacheable abstraction
 */
@Service
@Slf4j
@RequiredArgsConstructor
public class CompanyService {

    final JdbcTemplate jdbcTemplate;

    @Cacheable(value = "company-cache")
    public List<Company> getAllCompanies() {
        log.info("Fetching company from database!");
        return jdbcTemplate.query("select * from company", new CompanyRowMapper());
    }

    public void insertMockData() {
        log.info("Starting to insert mock data!");
        for (int i = 0; i < 10000; i++) {
            jdbcTemplate.update("INSERT INTO company (id, name) " + "VALUES (?, ?)",
                    i, "company_" + i);
        }
        log.info("Completed insert of mock data!");
    }

    class CompanyRowMapper implements RowMapper<Company> {
        @Override
        public Company mapRow(ResultSet rs, int rowNum) throws SQLException {
            return new Company(rs.getLong("id"), rs.getString("name"));
        }
    }
}
```
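`@Cacheable(value = "company-cache")` on `CompanyService.getAllCompanies()` means only the first call reaches the database. Later calls are answered from the Ignite-backed cache. The plain-Java sketch below shows that cache-aside behaviour with a `ConcurrentHashMap`. It is hypothetical, and Spring really does this through a proxy and the `SpringCacheManager`. The string key "getAllCompanies" is an illustrative assumption; for a method without parameters, Spring's default key generator uses a single empty key (`SimpleKey.EMPTY`).

```java
import java.util.List;
import java.util.Map;
import java.util.concurrent.ConcurrentHashMap;
import java.util.function.Supplier;

/** Hypothetical plain-Java picture of what @Cacheable does for a method result. */
class CacheableSketch {
    private final Map<String, Object> cache = new ConcurrentHashMap<>();

    /** Return the cached value for key, running the loader only on the first call. */
    @SuppressWarnings("unchecked")
    <T> T cacheable(String key, Supplier<T> loader) {
        return (T) cache.computeIfAbsent(key, k -> loader.get());
    }

    public static void main(String[] args) {
        CacheableSketch sketch = new CacheableSketch();
        int[] dbCalls = {0};
        Supplier<List<String>> query = () -> {
            dbCalls[0]++; // stands in for "select * from company"
            return List.of("company_0", "company_1");
        };
        sketch.cacheable("getAllCompanies", query);
        sketch.cacheable("getAllCompanies", query);
        System.out.println("database calls: " + dbCalls[0]); // prints "database calls: 1"
    }
}
```

One difference worth knowing: `computeIfAbsent` blocks concurrent callers of the same key. `@Cacheable` without `sync = true` does not, so two simultaneous first calls may both hit the database.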

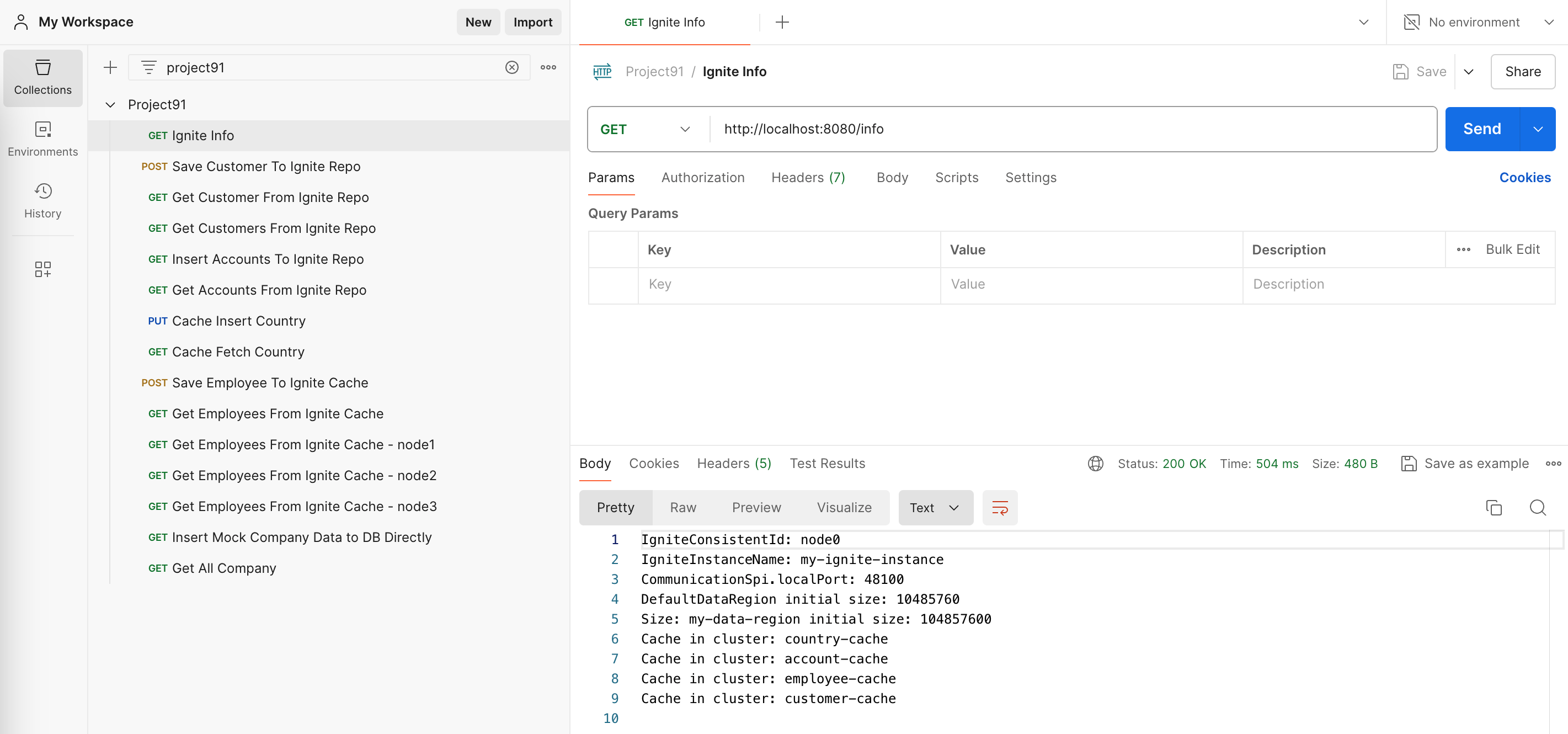

Postman

Import the Postman collection into Postman.

Setup

````markdown
# Project 91

Spring Boot - Apache Ignite

[https://gitorko.github.io/spring-boot-apache-ignite/](https://gitorko.github.io/spring-boot-apache-ignite/)

### Version

Check version

```bash
$java --version
openjdk 21.0.3 2024-04-16 LTS
```

### Postgres DB

```
docker run -p 5432:5432 --name pg-container -e POSTGRES_PASSWORD=password -d postgres:9.6.10
docker ps
docker exec -it pg-container psql -U postgres -W postgres
CREATE USER test WITH PASSWORD 'test@123';
CREATE DATABASE "test-db" WITH OWNER "test" ENCODING UTF8 TEMPLATE template0;
grant all PRIVILEGES ON DATABASE "test-db" to test;

docker stop pg-container
docker start pg-container
```

### Dev

To run the code:

```bash
export JAVA_TOOL_OPTIONS="--add-opens=jdk.management/com.sun.management.internal=ALL-UNNAMED \
--add-opens=java.base/jdk.internal.misc=ALL-UNNAMED \
--add-opens=java.base/sun.nio.ch=ALL-UNNAMED \
--add-opens=java.management/com.sun.jmx.mbeanserver=ALL-UNNAMED \
--add-opens=jdk.internal.jvmstat/sun.jvmstat.monitor=ALL-UNNAMED \
--add-opens=java.base/sun.reflect.generics.reflectiveObjects=ALL-UNNAMED \
--add-opens=java.base/java.io=ALL-UNNAMED \
--add-opens=java.base/java.lang=ALL-UNNAMED \
--add-opens=java.base/java.nio=ALL-UNNAMED \
--add-opens=java.base/java.time=ALL-UNNAMED \
--add-opens=java.base/java.util=ALL-UNNAMED \
--add-opens=java.base/java.util.concurrent=ALL-UNNAMED \
--add-opens=java.base/java.util.concurrent.locks=ALL-UNNAMED \
--add-opens=java.base/java.lang.invoke=ALL-UNNAMED"

./gradlew clean build
./gradlew bootRun
./gradlew bootJar
```

To run multiple node instances:

```bash
cd build/libs
java -jar project91-1.0.0.jar --server.port=8081 --ignite.nodeName=node1
java -jar project91-1.0.0.jar --server.port=8082 --ignite.nodeName=node2
java -jar project91-1.0.0.jar --server.port=8083 --ignite.nodeName=node3
```

JVM tuning parameters

```bash
java -Xms1024m -Xmx2048m -XX:MaxDirectMemorySize=256m -XX:+DisableExplicitGC -XX:+UseG1GC -XX:+ScavengeBeforeFullGC -XX:+AlwaysPreTouch -jar project91-1.0.0.jar --server.port=8080 --ignite.nodeName=node0
```
````

Issues

Standard CrudRepository save(entity), save(entities), delete(entity) operations aren't supported.

We have to use the save(key, value), save(Map<ID, Entity> values), deleteAll(Iterable

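With @EnableIgniteRepositories declared on IgniteConfig, the application can fail with "Can not perform the operation because the cluster is inactive. Note, that the cluster is considered inactive by default if Ignite Persistent Store is used to let all the nodes join the cluster. To activate the cluster call Ignite.cluster().state(ClusterState.ACTIVE)." Because persistence is enabled, the cluster must be activated explicitly after the node starts, with ignite.cluster().state(ClusterState.ACTIVE).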

```
Possible too long JVM pause: 418467 milliseconds.
Blocked system-critical thread has been detected. This can lead to cluster-wide undefined behaviour
```

GC pauses decrease overall performance. If a pause lasts longer than failureDetectionTimeout, the node is disconnected from the cluster. See https://apacheignite.readme.io/docs/jvm-and-system-tuning
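A warning like "Possible too long JVM pause" comes from noticing time the JVM could not account for. The sketch below shows the general technique: a thread sleeps for a fixed interval, and any extra elapsed time is treated as a pause (GC, swapping, a stopped VM). It is a hypothetical illustration of the idea, not Ignite's detector. The 10-second value used in the comparison is Ignite's default failureDetectionTimeout.

```java
/**
 * Hypothetical sketch of JVM pause detection: sleep for a known interval and
 * treat any extra elapsed time as a pause.
 */
class PauseDetectorSketch {
    /** Extra time beyond the requested sleep, in milliseconds (never negative). */
    static long pauseMillis(long sleepMs, long startNanos, long endNanos) {
        long elapsedMs = (endNanos - startNanos) / 1_000_000;
        return Math.max(0, elapsedMs - sleepMs);
    }

    /** A pause longer than the failure detection timeout gets the node dropped from the cluster. */
    static boolean exceedsFailureDetection(long pauseMs, long failureDetectionTimeoutMs) {
        return pauseMs > failureDetectionTimeoutMs;
    }

    public static void main(String[] args) throws InterruptedException {
        long sleepMs = 50;
        for (int i = 0; i < 3; i++) {
            long start = System.nanoTime();
            Thread.sleep(sleepMs);
            long pause = pauseMillis(sleepMs, start, System.nanoTime());
            System.out.println("extra delay: " + pause + " ms, too long: "
                    + exceedsFailureDetection(pause, 10_000));
        }
    }
}
```

Fed the 418467 ms pause from the log above, `exceedsFailureDetection` returns true against a 10 s timeout. That is why such a node is dropped from the topology.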

```
Failed to add node to topology because it has the same hash code for partitioned affinity as one of existing nodes
```

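Two instances cannot share the same node ID. The consistent ID is set from ignite.nodeName, so start each instance with a different --ignite.nodeName.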

When 3 nodes are running, the log shows the cluster topology:

```
Topology snapshot [ver=3, locNode=2e963fb3, servers=3, clients=0, state=ACTIVE, CPUs=16, offheap=38.0GB, heap=24.0GB]
```

```
Failed to validate cache configuration. Cache store factory is not serializable.
```

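The CacheJdbcPojoStoreFactory is serialized and sent to the other nodes. Everything it references, including the data source factory (DbFactory), must therefore implement Serializable.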
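The serialization failure can be reproduced without Ignite. The sketch below uses a plain `ObjectOutputStream` round trip as a stand-in for Ignite's marshalling. A factory that holds only `String` settings (as `DbFactory` does) survives the trip. A factory that references a non-serializable object fails with `NotSerializableException`. All class names here are hypothetical.

```java
import java.io.ByteArrayInputStream;
import java.io.ByteArrayOutputStream;
import java.io.IOException;
import java.io.ObjectInputStream;
import java.io.ObjectOutputStream;
import java.io.Serializable;
import java.util.function.Supplier;

/** Hypothetical stand-ins showing why a store's data source factory must be Serializable. */
class SerializationCheck {
    /** Holds only plain String settings, like DbFactory: serializes fine. */
    static class UrlFactory implements Supplier<String>, Serializable {
        private static final long serialVersionUID = 1L;
        private final String jdbcUrl;

        UrlFactory(String jdbcUrl) {
            this.jdbcUrl = jdbcUrl;
        }

        @Override
        public String get() {
            return jdbcUrl;
        }
    }

    /** Declares Serializable but references an object that is not: serialization fails. */
    static class BrokenFactory implements Supplier<String>, Serializable {
        private static final long serialVersionUID = 1L;
        private final Object notSerializable = new Object(); // java.lang.Object is not Serializable

        @Override
        public String get() {
            return "unused";
        }
    }

    /** Serialize and deserialize, roughly what happens when configuration is shipped to another node. */
    @SuppressWarnings("unchecked")
    static <T> T roundTrip(T value) throws IOException, ClassNotFoundException {
        ByteArrayOutputStream bytes = new ByteArrayOutputStream();
        try (ObjectOutputStream out = new ObjectOutputStream(bytes)) {
            out.writeObject(value);
        }
        try (ObjectInputStream in = new ObjectInputStream(new ByteArrayInputStream(bytes.toByteArray()))) {
            return (T) in.readObject();
        }
    }
}
```

Note that declaring `Serializable` is not enough. Every field reachable from the factory has to be serializable as well, which is why Spring-managed objects should not be captured.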